Contributing to open source has long been on my to-do list. I actually get chirped a lot for my love of open source. Anyway, I recently made the leap and committed some code to OpenCV.

I’d long been on the lookout for something good to add to OpenCV, and then one day it hit me: I had a utility function for cv::PCA that I’d been using a lot in my own work, and it would be a really nice addition to OpenCV. Having decided what I was going to contribute, I started reading up on how to go about it, and admittedly I was pretty confused. What actually helped me figure it out was the generic GitHub tutorial. I am a bit embarrassed to say it, but I am still pretty amateurish with source control. I’m getting better, though!

So I figured out the process, cloned myself a copy of the OpenCV repository, and got right to work. It was a pretty simple addition really: it gives another option for how to create a PCA space. Previously your only option was to specify the exact dimension of the space. My addition lets the user specify the percentage of variance the space should keep, and then figures out how many principal components to retain, thus deciding the dimension of the space.

It works by computing the cumulative energy content for each eigenvector (the running sum of the eigenvalues up to and including that vector), and then using the ratio of a specific vector’s cumulative energy to the total energy to determine how many component vectors to retain. You can read more about the specifics on the PCA Wikipedia page. Here is the source code:

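    // compute the cumulative energy content for each eigenvector
    // (assumes float elements, i.e. ctype == CV_32F)
    Mat g(eigenvalues.size(), ctype);

    for(int ig = 0; ig < g.rows; ig++)
    {
        g.at<float>(ig,0) = 0;
        for(int im = 0; im <= ig; im++)
        {
            g.at<float>(ig,0) += eigenvalues.at<float>(im,0);
        }
    }

    // find the first index whose share of the total energy exceeds the target
    int L;
    for(L = 0; L < eigenvalues.rows; L++)
    {
        double energy = g.at<float>(L, 0) / g.at<float>(g.rows - 1, 0);
        if(energy > retainedVariance)
            break;
    }

    // always keep at least two components
    L = std::max(2, L);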
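If you want to play with the selection rule without building OpenCV, here is a minimal standalone sketch of the same idea on a plain `std::vector`. The function name `componentsToRetain` is hypothetical and not part of OpenCV, and I am assuming the eigenvalues are non-negative, sorted in descending order, and have a positive sum. It keeps a running sum instead of filling a separate cumulative matrix, but it stops at the same index as the loop above.

```cpp
#include <algorithm>
#include <numeric>
#include <vector>

// Hypothetical helper, not an OpenCV function: mirrors the selection rule
// from the snippet above. Assumes non-negative eigenvalues sorted in
// descending order with a positive total.
int componentsToRetain(const std::vector<double>& eigenvalues, double retainedVariance)
{
    // total energy is the sum of all eigenvalues
    const double total = std::accumulate(eigenvalues.begin(), eigenvalues.end(), 0.0);
    const int n = static_cast<int>(eigenvalues.size());

    double running = 0.0; // cumulative energy up to and including index L
    int L = 0;
    for (; L < n; ++L)
    {
        running += eigenvalues[L];
        if (running / total > retainedVariance)
            break;
    }

    // same clamp as the original: never report fewer than two components
    return std::max(2, L);
}
```

For example, with eigenvalues {5, 2, 1, 1, 1} and a retained variance of 0.85, the cumulative ratios are 0.5, 0.7, 0.8 and 0.9, so the loop stops at index 3 and the function returns 3.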

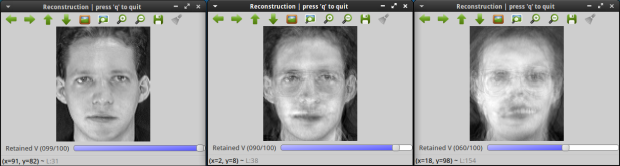

After coding my new feature, I wrote a nifty little sample program (you can find it at OpenCV-2.4.3/samples/cpp/pca.cpp) that adjusts the number of dimensions according to a slider bar and shows the effect on reconstructing a face!

I was all ready to go, so I pushed to my GitHub account and made my pull request to have the code merged. I received an email back the next day with some feedback. A few of my commit messages were junk; I hadn’t realised that these messages would tag along with my code. Oops! A git rebase fixed that issue. I also had not provided documentation or added tests for my new code. I didn’t even know about the test module and didn’t realise that I could edit the documentation. Silly me. I wrote the tests first, which was actually kind of fun and pretty straightforward, as I was able to modify the existing PCA test. The documentation was a bit more out there: I had never worked with Sphinx before, but luckily I was able to get by simply by looking at how stuff was done.

Pull request #2: this one looks much better, much more professional, and I am pretty proud of it. I wait, and I wait, and I hear nothing. There are a few other pull requests sitting there too. I figure hearing nothing might be good; maybe there are no glaring errors and they are carefully reviewing my work. Finally, about 2 weeks later, pull request accepted! I contributed to OpenCV, yay! A bit later on, one of the devs who I am internet friends with told me to check the OpenCV meeting notes. I got a shout-out!

There I am - “a small yet useful extension to PCA algorithm that computes the number of components to retain a specified standard deviate has been integrated via github pull request.”

Also, here is a link to the OpenCV page where my documentation can be seen:

http://docs.opencv.org/modules/core/doc/operations_on_arrays.html?highlight=pca#pca-pca